PeterJohnPyTorch

iPhone / Developers

Now You Can Use "PyTorch" on your iPhone even when you are on the train

or when your iPhone is OffLine.

****Why do we Need "PyTorch" on an Edge Device such as iPhone?;

When "Torch" is a Lamp, "iPhone" becomes a Lamp Stand,

even When you are on the train or when your iPhone is OffLine.

So doNot put "Torch" under any Basket ( Hidden Place ) but put it on your "iPhone".

So that the Light of the Lamp will Shine Before Others.

Scripture( Matthew 5:13;14-16 ) Says,

When the Light is Put on the Hill,

the City on the Hill canNot be Hidden.

****Matthew 5:13;14-16, ESV;

5:13 “You are the salt of the earth, but if salt has lost its taste,

how shall its saltiness be restored?

5:14 “You are the light of the world. A city set on a hill cannot be hidden.

5:15 Nor do people light a lamp and put it under a basket, but on a stand,

and it gives light to all in the house.

5:16 In the same way, let your light shine before others,

so that they may see your good works

and give glory to your Father who is in heaven.

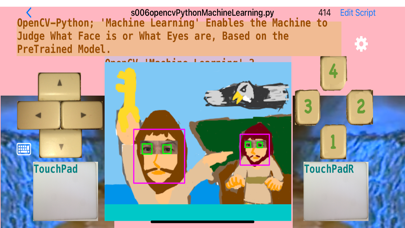

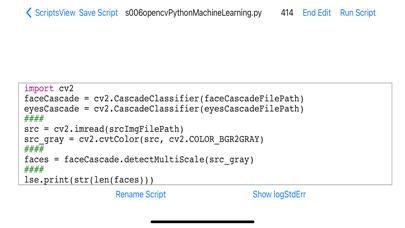

****TutorialSeason007;

We Prepared some Examples

to show you what you Can do Using PeterJohnPyTorch.

s001QuestAnswerLibTorch.py;

This isNot PyTorch but just LibTorch.

QuestionAnswering Demo with LibTorch.

You can Customize almost Nothing,

because LibTorch is Called Via Swift

and because Swift Needs to be Compiled

with Xcode on macOS.

This example Loads the Model from the "qa360_quantized.ptl" file,

which contains "TorchScript".

s002ProvisionPyTorchTensor.py;

This Shows PeterJohnPyTorch Can Use Tensor.
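As a rough idea of what a Tensor script can look like, here is a minimal sketch in plain PyTorch. It is Not the code of s002ProvisionPyTorchTensor.py; the values and variable names are made up for illustration.

```python
import torch

# Build a 2x3 tensor from nested Python lists (illustrative values).
a = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
b = torch.ones(2, 3)

total = a + b        # element-wise addition, shape stays (2, 3)
product = a @ b.T    # matrix product: (2x3) @ (3x2) -> (2x2)

print(total.shape, product.shape)
print(product)
```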

s003ProvisionPyTorchAutoGradFoundation.py;

This Shows PeterJohnPyTorch Can Use AutoGrad.

s004ProvisionPyTorchAutoGrad.py;

This Shows PeterJohnPyTorch Can Use AutoGrad.
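For readers new to AutoGrad, a minimal sketch of the idea follows. It is Not taken from the s003 or s004 scripts; the function y = x^2 + 2x is chosen only as an example.

```python
import torch

# Ask PyTorch to track operations on x so it can differentiate through them.
x = torch.tensor(3.0, requires_grad=True)

y = x ** 2 + 2 * x   # y = x^2 + 2x
y.backward()         # computes dy/dx = 2x + 2 and stores it in x.grad

print(x.grad)        # gradient at x = 3 is 2*3 + 2 = 8
```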

s005ProvisionPyTorchNN.py;

This Shows PeterJohnPyTorch Can Use torch.nn (NeuralNetwork).
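A small torch.nn model could be sketched as below. The class name TinyNet and the layer sizes are hypothetical and are Not from s005ProvisionPyTorchNN.py.

```python
import torch
from torch import nn

class TinyNet(nn.Module):
    """Hypothetical two-layer network: 4 inputs -> 8 hidden units -> 2 outputs."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(4, 8)
        self.out = nn.Linear(8, 2)

    def forward(self, x):
        # ReLU between the two linear layers.
        return self.out(torch.relu(self.hidden(x)))

model = TinyNet()
batch = torch.randn(5, 4)   # 5 random samples with 4 features each
logits = model(batch)
print(logits.shape)
```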

s006ProvisionPyTorchNNoptimizer.py;

This Shows PeterJohnPyTorch Can Use torch.nn (NeuralNetwork)

and Optimizer.

This Uses SGD (Stochastic Gradient Descent ) as the Optimizer.
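The usual training loop with torch.optim.SGD looks roughly like the sketch below. The data (a line y = 2x + 1), the learning rate and the number of steps are assumptions for illustration, Not the contents of s006ProvisionPyTorchNNoptimizer.py.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy data that follows y = 2x + 1 (made up for this sketch).
x = torch.linspace(-1, 1, 32).unsqueeze(1)
y = 2 * x + 1

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

first_loss = loss_fn(model(x), y).item()
for _ in range(200):
    optimizer.zero_grad()         # clear gradients from the previous step
    loss = loss_fn(model(x), y)   # forward pass on the whole toy data set
    loss.backward()               # AutoGrad fills in the gradients
    optimizer.step()              # SGD update of weight and bias
final_loss = loss_fn(model(x), y).item()

print(first_loss, final_loss)
```

Here every step uses the whole toy data set, so the "Stochastic" part is only in the name of the optimizer; with mini-batches the same loop applies.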

s007QuestAnswerPyTorch.py;

Now you Can See a QuestionAnswering demo

that Uses Not LibTorch But PyTorch.

You can Customize, for example, the Tokenizer

in "pjQuestionAnswering.py"

Using PyTorch on iPhone.

This example Loads the Model from the "qa360_quantized.ptl" file,

which contains "TorchScript".

s008QuestAnswerTransformers.py;

QuestionAnswering Demo with PyTorch and Transformers.

After you touch "Run Script",

this example begins to Download "model.safetensors" (265.5 MBytes).

We Recommend that you make a copy of "model.safetensors"

outside of this App "PeterJohnPyTorch",

using Apple's "Files.app".

So that, Even if you uninstall this App,

After you install this App "PeterJohnPyTorch" Again,

you can put the "model.safetensors" file back into the "/images" directory

of this App "PeterJohnPyTorch".

This example Loads the Model from the "model.safetensors" file,

which is the Standard format of "HuggingFace".
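Extractive QuestionAnswering models usually return one "start" score and one "end" score per token, and the answer is the span between the best start and the best end. The sketch below shows only that last step in plain PyTorch. The tokens and scores are made up, and this is Not the code of s008QuestAnswerTransformers.py.

```python
import torch

# Made-up tokens for a question and a context, as a tokenizer might produce.
tokens = ["[CLS]", "where", "is", "the", "lamp", "?", "[SEP]",
          "on", "a", "stand", "[SEP]"]

# Made-up model outputs: one score per token for "answer starts here"
# and one for "answer ends here".
start_logits = torch.full((len(tokens),), -5.0)
end_logits = torch.full((len(tokens),), -5.0)
start_logits[7] = 4.0   # "on"
end_logits[9] = 3.5     # "stand"

start = int(torch.argmax(start_logits))
# Search for the end only at or after the start so the span is valid.
end = start + int(torch.argmax(end_logits[start:]))

answer = " ".join(tokens[start:end + 1])
print(answer)
```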

****Some Restrictions that We Currently know of;

1) CanNot Trace any Model using the torch.jit.trace() Function;

Since "PeterJohnPyTorch" canNot Create any Traced Model Now,

it canNot Create either a ".ptl" ("PyTorch Lite" format) file

or a ".pt" ("PyTorch" format) file right now.

2) CanNot Script any Model using the torch.jit.script() Function;

Since "PeterJohnPyTorch" canNot Create any Scripted Model Now,

it canNot Create either a ".ptl" ("PyTorch Lite" format) file

or a ".pt" ("PyTorch" format) file right now.

For Now you Need to

save any Model as "model.safetensors",

and use the Model via PyTorch or via Transformers,

which Means Writing the Logic with PyTorch or with Transformers.

3) The "MPS" (Metal Performance Shaders) backend is Not Implemented Yet;

So Now you Need to Specify "CPU" as the Device.

The "CPU" backend is just the First Step Before the "GPU" backend.
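Specifying "CPU" as the Device can be sketched as follows in plain PyTorch. The model and the input here are made up.

```python
import torch
from torch import nn

# Choose the CPU explicitly instead of "mps" or "cuda".
device = torch.device("cpu")

model = nn.Linear(3, 1).to(device)
x = torch.randn(2, 3, device=device)

with torch.no_grad():   # inference only, no gradients needed
    y = model(x)

print(y.device)
```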

****

Enjoy PeterJohnPyTorch even when you are on the train

or when your iPhone is OffLine.

--Yasushi Yassun Obata

What's new in the latest version?

"s005ThreeDimSquash.py", "s006MMLshepherd.py" and "Edit" button at ScriptsView Version;

we Added Three things this time.

**1) TutorialSeason002/s005ThreeDimSquash.py;

This shows how to use pjThreeDim

and how to Create 3D games using the "PeterJohn" app.

Please touch "TouchPadLeft" and "UpCursor"

in order to play this game.

TouchPadLeft - move Racket;

UpCursor - Replay;

**2) TutorialSeason003/s006MMLshepherd.py;

we added two APIs to pjSound;

import pjSound as sound

sound.startNote(midiChannel,midiNote,midiVelocity);

sound.stopNote(midiChannel,midiNote,midiVelocity);

and added two Classes,

pjMMLshepherdClass

pjMMLsheepClass

in order to show how to use the two APIs above;

Please check the code of s006MMLshepherd.py

to see how to use the classes, pjMMLshepherdClass, pjMMLsheepClass.

Now you Can Play MML ( Music Macro Language ) using the "PeterJohn" app.
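The two pjSound calls listed above could be used roughly as in the sketch below. It assumes the names startNote and stopNote with the arguments (midiChannel, midiNote, midiVelocity) as written in the listing. The helpers note_to_midi and play, and the fallback class used when pjSound is Not available, are hypothetical; this does Not use pjMMLshepherdClass or pjMMLsheepClass.

```python
import time

try:
    import pjSound as sound   # only available inside the "PeterJohn" app
except ImportError:
    class _RecordingSound:
        """Hypothetical stand-in that records calls instead of playing audio."""

        def __init__(self):
            self.calls = []

        def startNote(self, channel, note, velocity):
            self.calls.append(("start", channel, note, velocity))

        def stopNote(self, channel, note, velocity):
            self.calls.append(("stop", channel, note, velocity))

    sound = _RecordingSound()

# Semitone offsets of the natural notes within one octave.
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}

def note_to_midi(name, octave=4):
    """Convert a note name to a MIDI note number (C4 = 60, A4 = 69)."""
    return 12 * (octave + 1) + SEMITONES[name.upper()]

def play(melody, channel=0, velocity=100, seconds=0.0):
    """Play each letter of `melody` as one note, one after another."""
    for name in melody:
        note = note_to_midi(name)
        sound.startNote(channel, note, velocity)
        time.sleep(seconds)   # how long the note is held
        sound.stopNote(channel, note, velocity)

play("CDE")
```

Inside the app, a call such as play("CDE", seconds=0.3) would hold each note long enough to hear it, if pjSound behaves as assumed here.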

**3) "Edit" button at the TopRight of "ScriptsView";

so that you Can Remove any Non Required File.

Best Regards,

--Yasushi Yassun Obata